Quantum Hardware & Processors

1. What Are Quantum Hardware & Processors?

Quantum hardware and processors are the physical components that make up quantum computers and enable them to perform quantum computations. These components exploit quantum mechanical principles such as superposition, entanglement, and quantum interference, which distinguish quantum computers from classical computers. Classical computers use bits as the basic unit of information, each of which is either 0 or 1. Quantum computers use qubits, which can exist in a superposition of 0 and 1: a qubit's state is described by two complex amplitudes, and a measurement returns 0 or 1 with probabilities given by the squared magnitudes of those amplitudes. The development of quantum hardware is essential for harnessing quantum computing to solve complex problems in fields like cryptography, optimization, AI, and more.
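To make the superposition idea concrete, here is a minimal Python sketch of the measurement rule for a single qubit: a state α|0⟩ + β|1⟩ yields 0 with probability |α|² and 1 with probability |β|². The function names and the chosen amplitudes are illustrative assumptions, and the "measurement" is a classical random sample, not a model of any particular hardware.

```python
import cmath
import math
import random


def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Born rule for a single qubit alpha|0> + beta|1> (normalized here for safety)."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm


def sample_measurements(alpha: complex, beta: complex, shots: int, seed: int = 0) -> dict[int, int]:
    """Simulate repeated measurements; each shot yields a single classical bit."""
    p0, _ = measurement_probabilities(alpha, beta)
    rng = random.Random(seed)
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        counts[0 if rng.random() < p0 else 1] += 1
    return counts


# Equal superposition with a relative phase: the phase does not change the 0/1 statistics.
alpha = 1 / math.sqrt(2)
beta = cmath.exp(1j * math.pi / 4) / math.sqrt(2)
print(measurement_probabilities(alpha, beta))
print(sample_measurements(alpha, beta, shots=10_000))
```

Each individual shot gives only a 0 or a 1; the amplitudes show up only in the statistics over many repetitions.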

Quantum processors are the core components that execute quantum algorithms. These processors manipulate qubits using various quantum operations, which are implemented as quantum gates, similar to how classical computers use logic gates (AND, OR, NOT) to process bits. A quantum processor consists of a network of qubits that interact with each other to perform calculations. The primary challenge in quantum hardware development is to maintain qubits' coherence, meaning they must retain their quantum state long enough to perform computations without being disturbed by environmental factors, such as heat or electromagnetic interference. Qubits are highly sensitive and prone to decoherence and quantum noise, making it a significant challenge to build stable, reliable quantum processors.
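The gate picture can be illustrated with a small state-vector simulation, assuming the ideal, noise-free gates of textbook quantum computing. The sketch below (with hypothetical helper names `apply_hadamard` and `apply_cnot`) applies a Hadamard gate and then a CNOT gate to two qubits starting in |00⟩, producing the entangled Bell state (|00⟩ + |11⟩)/√2. Real processors implement these gates with physical pulses and introduce errors that this model ignores.

```python
import math


def apply_hadamard(state, qubit, n):
    """Apply H to `qubit` of an n-qubit state vector (qubit 0 = leftmost bit)."""
    bit = 1 << (n - 1 - qubit)
    new = list(state)
    for i in range(len(state)):
        if not (i & bit):  # visit each (|..0..>, |..1..>) amplitude pair once
            a, b = state[i], state[i | bit]
            new[i] = (a + b) / math.sqrt(2)
            new[i | bit] = (a - b) / math.sqrt(2)
    return new


def apply_cnot(state, control, target, n):
    """Flip `target` wherever `control` is 1, i.e. swap the paired amplitudes."""
    cbit = 1 << (n - 1 - control)
    tbit = 1 << (n - 1 - target)
    new = list(state)
    for i in range(len(state)):
        if (i & cbit) and not (i & tbit):
            new[i], new[i | tbit] = state[i | tbit], state[i]
    return new


# Start in |00>, put qubit 0 in superposition, then entangle it with qubit 1.
state = [1.0, 0.0, 0.0, 0.0]  # amplitudes of |00>, |01>, |10>, |11>
state = apply_hadamard(state, 0, 2)
state = apply_cnot(state, 0, 1, 2)
for label, amp in zip(["00", "01", "10", "11"], state):
    print(f"|{label}>: amplitude {amp:+.4f}, probability {abs(amp) ** 2:.2f}")
```

The state vector doubles in length with every added qubit, which is why this kind of classical simulation becomes infeasible for large qubit counts.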

The development of quantum processors requires advanced engineering and material science, as well as new techniques for quantum error correction to ensure that quantum computations are reliable despite the challenges of decoherence and noise. Quantum error correction techniques are crucial because qubits are highly susceptible to errors due to their sensitivity to external disturbances, and without error correction, large-scale quantum computation would not be feasible.

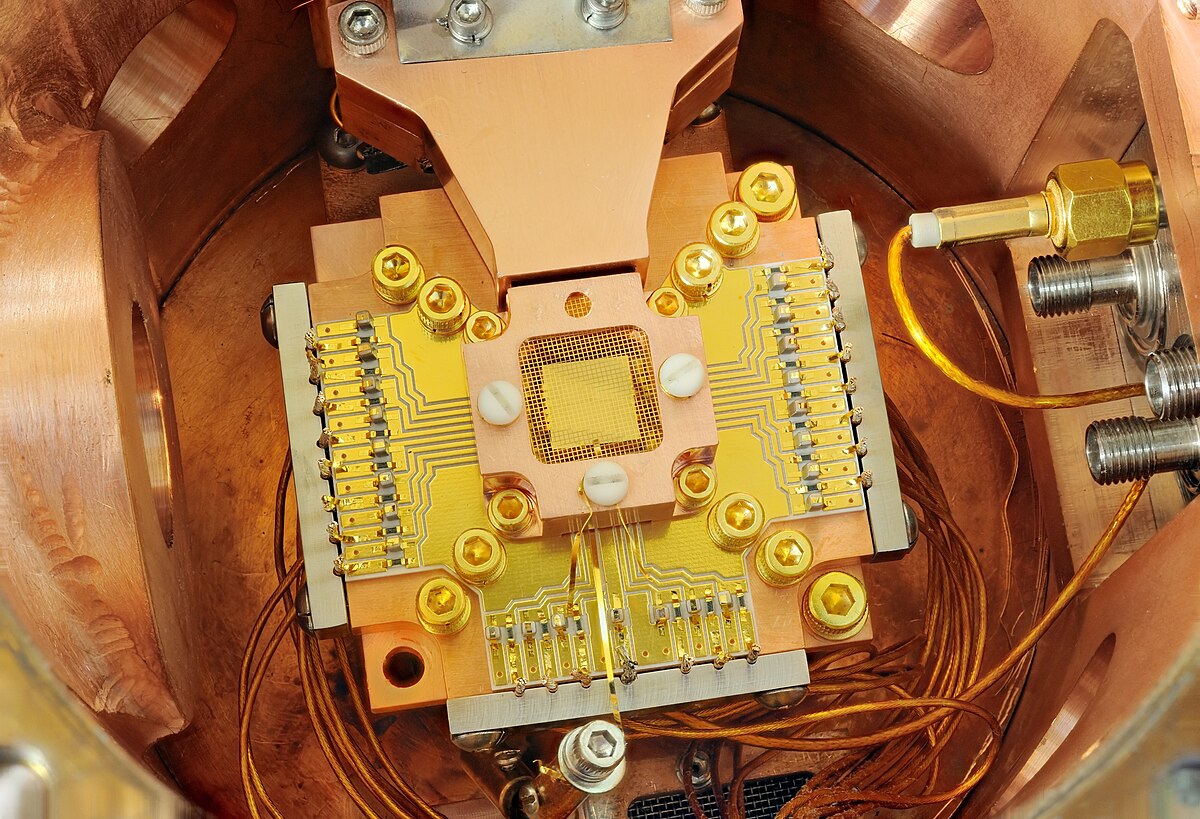

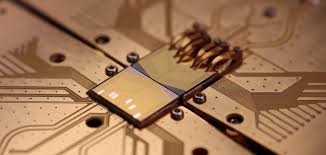

2. Superconducting Qubits

Superconducting qubits are one of the most widely studied and used types of qubits in quantum computing. They are built from superconducting circuits: electrical circuits made from materials that exhibit zero electrical resistance when cooled below a critical temperature (in practice, the chips are operated at millikelvin temperatures inside a dilution refrigerator). At these temperatures the circuits behave as macroscopic quantum systems with quantized energy levels, and two of those levels are used to represent a qubit, the fundamental unit of information in a quantum computer.

Superconducting qubits are typically built around Josephson junctions: thin insulating barriers between two superconductors through which a supercurrent (a current that flows without resistance) can tunnel. The junction acts as a nonlinear inductor, so the circuit's energy levels are unevenly spaced. This is the key ingredient: in a purely linear circuit the levels would be evenly spaced and a control pulse could not address the lowest two on their own, whereas the nonlinearity lets the lowest two levels serve as |0⟩ and |1⟩. The circuit can then be placed in a superposition of these states (the ability of a qubit to exist in multiple states simultaneously) and exhibit quantum interference (where the probability amplitudes of different paths can reinforce or cancel each other). The qubit state is manipulated with microwave pulses tuned to the frequency of the |0⟩ to |1⟩ transition.
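The role of the junction's nonlinearity can be made quantitative for the transmon, a common superconducting qubit design. In the regime E_J ≫ E_C (Josephson energy much larger than charging energy), a widely used approximation gives the energy levels as E_m ≈ -E_J + √(8 E_J E_C)(m + 1/2) - (E_C/12)(6m² + 6m + 3). The sketch below evaluates that approximation with hypothetical parameter values; it shows that the 0→1 and 1→2 transition frequencies differ by about E_C, which is what lets a microwave pulse drive the qubit transition alone.

```python
import math


def transmon_levels(ej_ghz: float, ec_ghz: float, n_levels: int = 3) -> list[float]:
    """Approximate transmon energies E_m / h in GHz, valid only for E_J / E_C >> 1.

    E_m ~ -E_J + sqrt(8 E_J E_C) (m + 1/2) - (E_C / 12) (6 m^2 + 6 m + 3)
    """
    plasma = math.sqrt(8 * ej_ghz * ec_ghz)
    return [
        -ej_ghz + plasma * (m + 0.5) - (ec_ghz / 12) * (6 * m * m + 6 * m + 3)
        for m in range(n_levels)
    ]


# Hypothetical parameters: E_J/h = 20 GHz, E_C/h = 0.25 GHz (E_J/E_C = 80).
e0, e1, e2 = transmon_levels(20.0, 0.25)
f01 = e1 - e0              # qubit frequency, about sqrt(8 E_J E_C) - E_C
f12 = e2 - e1              # next transition up, about sqrt(8 E_J E_C) - 2 E_C
anharmonicity = f12 - f01  # about -E_C: the level spacing is not uniform
print(f"f01 = {f01:.3f} GHz, f12 = {f12:.3f} GHz, anharmonicity = {anharmonicity * 1000:.0f} MHz")
```

A pulse at f01 is detuned from f12 by the anharmonicity, so the pulse must be spectrally narrower than that difference to avoid leaking population into the third level.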

However, superconducting qubits face significant challenges, primarily decoherence and noise. Because they are sensitive to environmental disturbances, they gradually lose their quantum properties, a process known as decoherence. Their coherence times are considerably shorter than those of trapped-ion qubits, which limits how many operations can be carried out before the quantum information degrades. They are also affected by quantum noise from imperfect control pulses and defects in the circuit materials, which introduces errors into computations. Overcoming these challenges requires quantum error correction and better control of qubit stability. Companies like IBM, Google, and Rigetti are working to improve the coherence times of superconducting qubits and to develop strategies that mitigate these issues, with the goal of scaling quantum computers for practical use in fields like cryptography, optimization, and artificial intelligence.

3. Trapped Ion Quantum Computing

Trapped ion quantum computing uses individual ions (charged atoms) as qubits. The ions are held in place by electromagnetic fields and manipulated with lasers. Each qubit is typically encoded in two internal energy levels of an ion; the ion can be placed in a superposition of these levels (0 and 1 at the same time), and ions can be entangled with one another, usually by coupling through their shared motion in the trap, to perform multi-qubit operations. Quantum gates are applied with precisely tuned and timed laser pulses.
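Laser-driven single-qubit gates can be pictured with the textbook Rabi model of a two-level system. The sketch below assumes an idealized ion (no spontaneous emission, no laser noise, no motional effects) and a hypothetical 100 kHz Rabi frequency; it computes the probability of finding the ion in |1⟩ after a pulse, using the generalized Rabi formula P₁(t) = (Ω²/Ω_eff²) sin²(Ω_eff t/2) with Ω_eff = √(Ω² + Δ²), where Δ is the laser detuning.

```python
import math


def excited_population(rabi_freq_hz: float, t_s: float, detuning_hz: float = 0.0) -> float:
    """Probability of finding the ion in |1> after a laser pulse of length t_s, starting in |0>.

    Ideal two-level (Rabi) model: no spontaneous emission, no laser-intensity
    noise, and no coupling to the ion's motion.
    """
    omega = 2 * math.pi * rabi_freq_hz    # Rabi frequency (rad/s)
    delta = 2 * math.pi * detuning_hz     # laser detuning (rad/s)
    omega_eff = math.hypot(omega, delta)  # generalized Rabi frequency
    return (omega / omega_eff) ** 2 * math.sin(omega_eff * t_s / 2) ** 2


rabi = 100e3           # hypothetical 100 kHz Rabi frequency
t_pi = 1 / (2 * rabi)  # "pi pulse" duration: flips |0> to |1>
print(excited_population(rabi, t_pi))                    # resonant pi pulse
print(excited_population(rabi, t_pi / 2))                # pi/2 pulse: equal superposition
print(excited_population(rabi, t_pi, detuning_hz=rabi))  # mistuned laser
```

A pulse of the right length fully flips the qubit, a half-length pulse creates an equal superposition, and a mistuned laser gives an incomplete flip, which is one reason trapped-ion gates demand precise laser frequency and timing control.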

The main advantage of trapped ion quantum computing is the high fidelity of its qubit operations. Trapped ions exhibit extremely long coherence times, so their quantum state can be preserved much longer than in most other qubit technologies. This makes them reliable for quantum calculations, since less quantum information is lost to decoherence. The ions are also well isolated from environmental noise, because the trapping fields suspend them in vacuum away from any surface, which further contributes to their stability. Together, these properties allow high-precision operations, making trapped ion systems suitable for implementing complex quantum algorithms.

However, scaling trapped ion quantum computers is one of their biggest challenges. While each individual ion can be manipulated with great precision, the system's scalability is limited by the ability to trap and control many ions at once. As the number of qubits grows, the laser systems, ion traps, and control mechanisms become more complex, and the lasers must address each ion precisely without disturbing its neighbors, which gets harder as more ions share the same trap. Despite these challenges, companies like IonQ and Honeywell (whose quantum computing business is now part of Quantinuum) are making significant strides in developing trapped ion quantum computers, aiming to build more scalable and powerful systems.

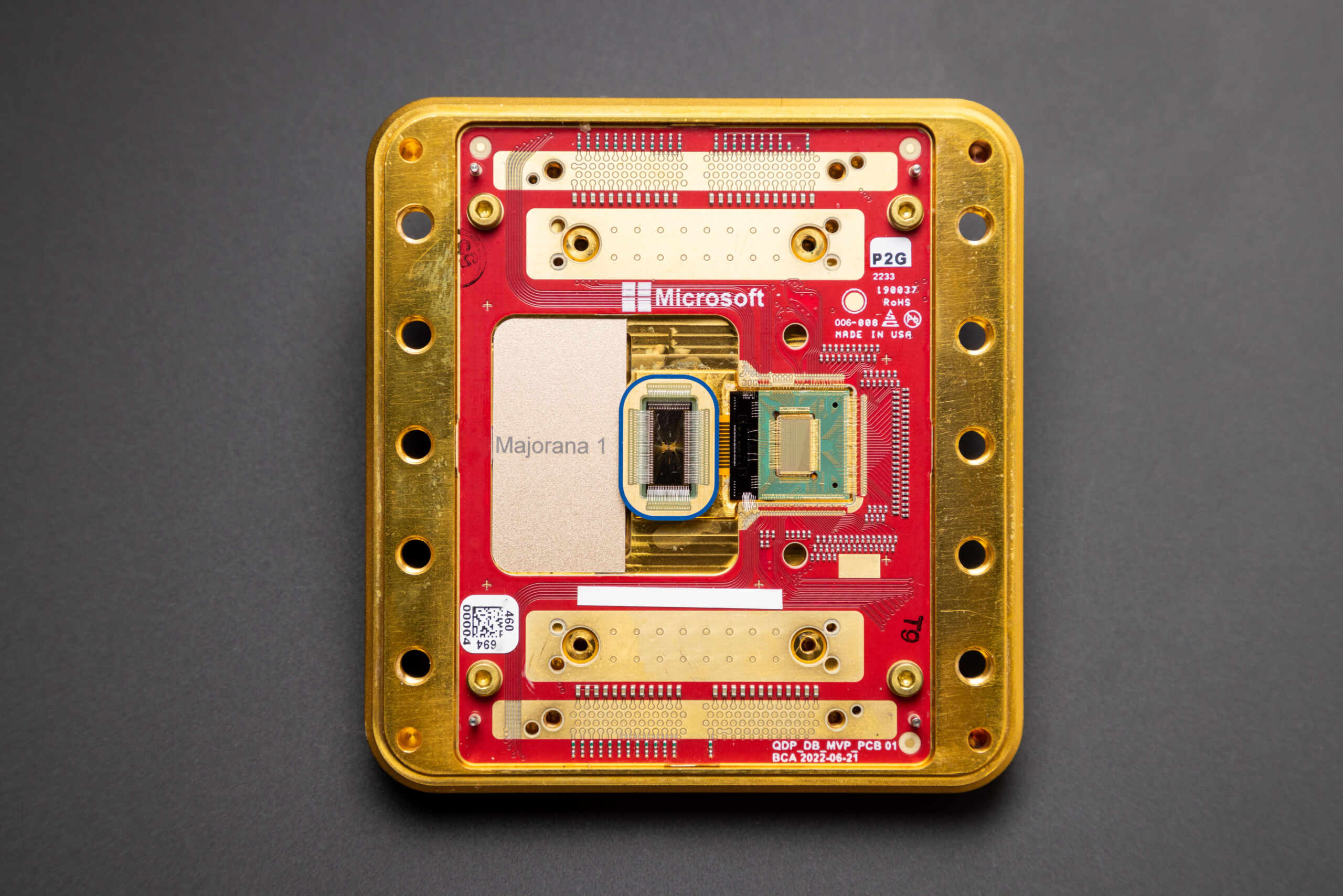

4. Topological Qubits

Topological qubits are a promising, still emerging approach to quantum computing that aims to address some of the biggest challenges faced by other qubit technologies, such as high error rates and decoherence. Conventional qubits are typically based on the states of individual atoms, ions, or superconducting circuits, whereas topological qubits store information in topologically protected states. These states arise from anyons, exotic quasiparticles that can exist in two-dimensional systems. In a topological quantum computer, a qubit is encoded nonlocally in the collective state of several anyons and is manipulated by braiding them, that is, by moving them around one another. Because the information is not stored in any single location, local disturbances cannot easily corrupt it. This inherent error resistance is the main attraction of topological qubits: they have the potential to be much less sensitive to environmental noise and decoherence.

This protection is the basis for the expected fault tolerance of topological qubits. Traditional qubits, such as those used in superconducting and trapped ion quantum computers, are prone to errors from interactions with their environment, which lead to decoherence and computational errors. Topological qubits are expected to be far less susceptible to these errors because their information is stored in a topologically protected manner. In theory, they could therefore remain stable for longer, allowing quantum computers built from them to perform longer computations without losing quantum information. They could also provide a more scalable platform, because lower intrinsic error rates would reduce the overhead of error correction.

However, the development of topological qubits is still at an early stage, and many technical challenges remain. One of the biggest hurdles is creating and manipulating anyons in the lab. While the theoretical framework for topological quantum computing is well developed, experimentally realizing topological qubits is extremely challenging, and research is ongoing to create stable anyons and to devise methods for manipulating them to perform quantum operations. Companies like Microsoft, through its Station Q research effort, are heavily invested in topological qubits, aiming to create a more stable and scalable quantum computing platform. The promise of topological qubits lies in their potential to overcome many of the limitations of other qubit technologies, but significant research and development are required before they can become a mainstream technology in quantum computing.

5. Quantum Annealing

Quantum annealing is a quantum computing technique used primarily for optimization problems, where the goal is to find the best solution among a very large set of possible solutions. It relies on quantum superposition and quantum tunneling. The optimization problem is first rewritten as an energy function (typically an Ising model, or equivalently a QUBO, a quadratic unconstrained binary optimization problem), so that the best solution corresponds to the lowest-energy (ground) state of a physical system. The annealer starts its qubits in the easily prepared ground state of a simple "driver" Hamiltonian, which is an equal superposition of all candidate solutions, and then gradually shifts the system's Hamiltonian toward the one that encodes the problem. If this change is slow enough, the system stays close to its ground state throughout (the adiabatic theorem) and ends near the optimal solution; along the way, quantum tunneling can help it pass through energy barriers that separate local minima from the global minimum.
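The annealing process can be written as a time-dependent Hamiltonian that interpolates between a driver term and a problem term. The form below is one common convention (hardware vendors differ in normalization and sign conventions); h_i and J_ij encode the problem, σ^x_i and σ^z_i are Pauli operators on qubit i, and t_a is the total anneal time.

```latex
H(s) = A(s)\,H_{\mathrm{driver}} + B(s)\,H_{\mathrm{problem}}, \qquad s = t / t_a \in [0, 1]

H_{\mathrm{driver}} = -\sum_i \sigma^x_i, \qquad
H_{\mathrm{problem}} = \sum_i h_i\,\sigma^z_i + \sum_{i<j} J_{ij}\,\sigma^z_i\,\sigma^z_j
```

A(s) starts large and falls to nearly zero while B(s) does the opposite. The ground state of H_driver is the equal superposition of all 2^n bit strings. Replacing each σ^z_i by its eigenvalue s_i ∈ {-1, +1} turns H_problem into the classical energy E(s) = Σ_i h_i s_i + Σ_{i<j} J_ij s_i s_j, so the final ground state is the optimal spin configuration. As a rule of thumb, the anneal must be slow compared with 1/Δ_min² (in units where ħ = 1), where Δ_min is the smallest gap between the ground state and the first excited state during the anneal.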

The hoped-for advantage of quantum annealing is that it may solve some optimization problems more efficiently than classical algorithms, particularly large and complex ones; whether, and for which problems, it delivers a practical speedup is still an open research question. Classical heuristics such as simulated annealing explore the solution space with random, temperature-driven moves: they can cross an energy barrier only by temporarily accepting worse solutions, so they can get stuck in local minima (suboptimal solutions) when barriers are high. Quantum annealing can instead tunnel through such barriers, which may speed up the search for the global optimum, especially when the barriers are tall but narrow. This makes quantum annealing of interest for problems in logistics, finance, machine learning, and materials science, where optimization is a crucial task.
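For contrast, here is a minimal Python sketch of the classical simulated annealing baseline, applied to a tiny, hypothetical Ising problem with energy E(s) = Σ h_i s_i + Σ J_ij s_i s_j, the same form of energy function that quantum annealers are given. It is purely classical: uphill moves are accepted with probability exp(-ΔE/T), the thermal "hopping over barriers" described above, and it does not simulate quantum tunneling. The brute-force search is included only to check the answer and is feasible only for a handful of spins.

```python
import itertools
import math
import random


def ising_energy(spins, h, J):
    """E(s) = sum_i h_i s_i + sum_(i,j) J_ij s_i s_j, with every s_i in {-1, +1}."""
    energy = sum(h_i * s for h_i, s in zip(h, spins))
    energy += sum(w * spins[i] * spins[j] for (i, j), w in J.items())
    return energy


def brute_force_ground_state(h, J):
    """Exact minimum by enumerating all 2^n configurations (tiny n only)."""
    best = min(itertools.product((-1, 1), repeat=len(h)), key=lambda s: ising_energy(s, h, J))
    return list(best), ising_energy(best, h, J)


def simulated_annealing(h, J, steps=5_000, t_start=5.0, t_end=0.01, seed=0):
    """Classical SA: random single-spin flips, accepted with the Metropolis rule."""
    rng = random.Random(seed)
    spins = [rng.choice((-1, 1)) for _ in h]
    energy = ising_energy(spins, h, J)
    best, best_energy = list(spins), energy
    for k in range(steps):
        # Geometric cooling: high temperature first (large jumps), low temperature at the end.
        temp = t_start * (t_end / t_start) ** (k / max(steps - 1, 1))
        i = rng.randrange(len(spins))
        spins[i] = -spins[i]
        new_energy = ising_energy(spins, h, J)
        delta = new_energy - energy
        # Uphill moves are accepted with probability exp(-delta / T).
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            energy = new_energy
            if energy < best_energy:
                best, best_energy = list(spins), energy
        else:
            spins[i] = -spins[i]  # reject the move and restore the spin
    return best, best_energy


# Hypothetical 4-spin problem with competing (frustrated) couplings.
h = [0.5, -0.3, 0.2, -0.1]
J = {(0, 1): 1.0, (1, 2): 1.0, (2, 3): 1.0, (0, 3): -1.0, (0, 2): 0.5}
print("exact:", brute_force_ground_state(h, J))
print("simulated annealing:", simulated_annealing(h, J))
```

With only four spins the search space is trivial; the difficulty described in the text appears on large, rugged energy landscapes, where the cooling schedule (`t_start`, `t_end`, `steps`, all assumed values here) strongly affects how often the search ends in a local minimum.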

However, quantum annealing is not a general-purpose quantum computing method and is typically limited to solving specific types of problems, particularly those that can be formulated as optimization tasks. Unlike gate-based quantum computing, which can theoretically be applied to a wide range of problems, quantum annealing is designed for problems where finding the optimal solution is the primary goal. One of the most notable examples of quantum annealing technology is provided by D-Wave Systems, which has developed quantum annealers that are commercially available. These systems are used for real-world optimization problems, although they are still limited by the number of qubits and their susceptibility to noise. As quantum annealing technology evolves, researchers are working on scaling up these systems and improving their accuracy and efficiency.

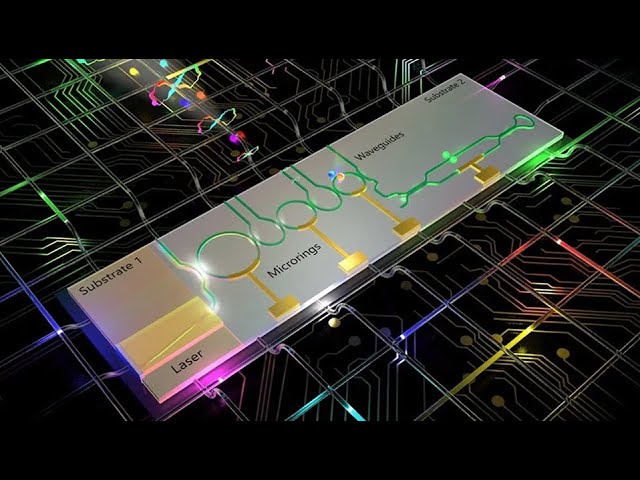

6. Photonic Quantum Computing

Photonic quantum computing uses photons (particles of light) as the carriers of quantum information. Qubits are encoded in properties of single photons, such as polarization, phase, or which path (spatial mode) a photon takes. Because photons interact only weakly with their environment, they are less prone to decoherence than many other qubit technologies, such as superconducting qubits or trapped ions; their main weakness is loss, since a photon that is absorbed or scattered takes its quantum information with it. Photons also travel at the speed of light, which makes them natural carriers for moving quantum information between locations. These properties make photonic quantum computing a promising candidate for large-scale, error-resistant quantum computers. Photons are manipulated with optical components such as beam splitters, phase shifters, wave plates, and interferometers, which together implement quantum gates.
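Interference between optical paths is the basic resource here. The sketch below models a single photon in a Mach-Zehnder interferometer (two 50:50 beam splitters with a phase shifter between them) using path ("dual-rail") encoding, where the photon in path 0 represents |0⟩ and in path 1 represents |1⟩. The beam-splitter matrix convention and the function names are assumptions for illustration, and all components are treated as lossless. The output probabilities follow sin²(φ/2) and cos²(φ/2), so the interferometer acts as a tunable single-qubit gate.

```python
import cmath
import math

# Assumed conventions: a lossless 50:50 beam splitter with matrix
# (1/sqrt(2)) [[1, i], [i, 1]] and a phase shifter acting on path 1.


def beam_splitter(a0, a1):
    s = 1 / math.sqrt(2)
    return s * (a0 + 1j * a1), s * (1j * a0 + a1)


def phase_shift(a0, a1, phi):
    return a0, a1 * cmath.exp(1j * phi)


def mach_zehnder(phi):
    """Photon enters path 0 and passes BS -> phase phi -> BS; return detection probabilities."""
    a0, a1 = 1.0, 0.0
    a0, a1 = beam_splitter(a0, a1)
    a0, a1 = phase_shift(a0, a1, phi)
    a0, a1 = beam_splitter(a0, a1)
    return abs(a0) ** 2, abs(a1) ** 2


for phi in (0.0, math.pi / 2, math.pi):
    p0, p1 = mach_zehnder(phi)
    print(f"phi = {phi:.3f}: P(path 0) = {p0:.3f}, P(path 1) = {p1:.3f}")
```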

One of the key advantages of photonic quantum computing is the low decoherence rate of photons. Many other qubit types must be kept at extremely low temperatures to suppress noise, whereas photonic qubits keep their quantum state while traveling through optical fibers or free space, and much of the optical hardware works at room temperature (although high-efficiency single-photon detectors often still require cryogenic cooling). This makes photonics well suited to quantum communication and quantum networking, where quantum information must be sent over long distances. Photonic qubits can also, in principle, use existing fiber-optic communication infrastructure, making them a natural fit for a future quantum internet and secure communication networks. Finally, photons can be multiplexed in time, frequency, or space, so many photonic qubits can be generated and processed in parallel.

Despite these advantages, scalability is one of the biggest challenges for photonic quantum computing. Small-scale systems have been demonstrated successfully, but scaling up the number of qubits and quantum gates remains a significant hurdle. Photons are difficult to store, and because they barely interact with one another, entangling two-qubit gates cannot be built deterministically from beam splitters and phase shifters alone. Such gates are instead typically implemented probabilistically, using additional photons and measurements, so building the universal gate set needed for general-purpose quantum computing requires large numbers of precisely controlled single photons and is still a work in progress. Companies like PsiQuantum and Xanadu are making significant progress in developing photonic quantum computing systems, with the goal of overcoming these scalability challenges. Photonic quantum computing has the potential to revolutionize fields such as quantum cryptography, machine learning, and optimization, but further research and technological advances are required to make it a practical, large-scale solution.

7. Noise in Quantum Processors

Noise in quantum processors is one of the primary challenges that quantum computing faces today. Quantum systems are highly sensitive to their environment, and even the slightest disturbance can introduce errors into a computation; these unwanted disturbances are collectively referred to as "quantum noise." In classical computers, each bit is either 0 or 1 and is stored with a wide margin that makes it relatively resistant to noise. Qubits, by contrast, can exist in superposition states (both 0 and 1 at once) whose information lies in the precise amplitudes and relative phase of their 0 and 1 components. This delicate structure makes them highly susceptible to noise, which leads to decoherence and errors that disrupt the processing of quantum information.

Several types of noise affect quantum processors, including decoherence and operational noise. Decoherence occurs when a qubit loses its quantum properties through interactions with its environment, such as stray electromagnetic radiation or temperature fluctuations: its superposition gradually degrades into an ordinary probabilistic mixture of 0 and 1, and any entanglement with other qubits is lost. Decoherence is usually characterized by two timescales: T1, the energy-relaxation time over which an excited qubit decays to |0⟩, and T2, the dephasing time over which the relative phase between |0⟩ and |1⟩ is scrambled. Operational noise, on the other hand, arises during quantum gate operations, where imperfections in how the gates are implemented cause unwanted changes in the qubit state. These errors accumulate over the course of a computation and can prevent accurate results. Together, these noise sources limit the accuracy and scalability of quantum processors.
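A rough numerical feel for these effects can come from simple exponential models. The sketch below treats T1 as the energy-relaxation time (an excited qubit decays to |0⟩) and T2 as the dephasing time (the relative phase of a superposition is scrambled), each modeled as a pure exponential decay, and it estimates how independent gate errors compound over a circuit as (1 - p)^N. The values (T1 = 100 µs, T2 = 80 µs, a 0.1% error per gate) are hypothetical, and real devices show non-exponential and correlated noise that this model ignores.

```python
import math


def relaxation_survival(t_us: float, t1_us: float) -> float:
    """Probability that a qubit prepared in |1> has not yet relaxed to |0> after t (T1 decay)."""
    return math.exp(-t_us / t1_us)


def phase_coherence(t_us: float, t2_us: float) -> float:
    """Remaining phase coherence of a superposition after t (1 = intact, 0 = fully dephased)."""
    return math.exp(-t_us / t2_us)


def circuit_success_estimate(error_per_gate: float, n_gates: int) -> float:
    """Crude estimate: every gate must succeed, and gate errors are independent."""
    return (1 - error_per_gate) ** n_gates


T1_US, T2_US = 100.0, 80.0  # hypothetical coherence times in microseconds
for t in (10.0, 50.0, 100.0):
    print(f"t = {t:5.1f} us: P(still in |1>) = {relaxation_survival(t, T1_US):.3f}, "
          f"coherence = {phase_coherence(t, T2_US):.3f}")

for n in (10, 100, 1000):
    print(f"{n:4d} gates at 0.1% error each: success ~ {circuit_success_estimate(1e-3, n):.3f}")
```

Even a small per-gate error adds up: under this model, a thousand-gate circuit at 0.1% error per gate succeeds only about a third of the time.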

To address noise in quantum processors, researchers are developing strategies such as quantum error correction (QEC) and noise reduction techniques. Quantum error correction encodes quantum information redundantly across several qubits so that errors can be detected and corrected without collapsing the encoded state: instead of measuring the data directly, the hardware measures parities (error syndromes) of groups of qubits, which reveal which error occurred but not the encoded information. QEC can in principle suppress errors to arbitrarily low levels once physical error rates are below a threshold, but it requires significant overhead in additional qubits and operations, which makes it challenging for current quantum processors. Other methods, such as dynamical decoupling (sequences of control pulses that average out slowly varying noise) and feedback mechanisms, aim to actively counteract noise during quantum operations. More broadly, fault-tolerant quantum computing aims to build quantum systems that operate accurately despite noise. Overcoming noise remains a crucial hurdle for the practical deployment of quantum computers, and researchers are exploring a range of techniques to improve the stability and reliability of quantum processors.
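The simplest illustration of this principle is the three-qubit bit-flip (repetition) code: a logical 0 is stored as 000, and a single flipped bit can be identified and undone. The sketch below is a classical Monte Carlo simulation of that code under independent bit flips with probability p, decoded by majority vote; it ignores phase errors and the ancilla-based syndrome measurements a real quantum implementation needs, and the function names are hypothetical. Decoding fails only when two or three bits flip, so the logical error rate is 3p²(1 - p) + p³, which is below p whenever p < 1/2.

```python
import random


def majority_vote(bits):
    return 1 if sum(bits) >= 2 else 0


def simulate_logical_error_rate(p, trials=100_000, seed=1):
    """Encode logical 0 as 000, flip each bit independently with probability p, decode by majority."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        codeword = [0, 0, 0]
        noisy = [bit ^ (rng.random() < p) for bit in codeword]
        if majority_vote(noisy) != 0:
            failures += 1
    return failures / trials


def analytic_logical_error_rate(p):
    """Decoding fails only if two or three of the three bits flip: 3p^2(1 - p) + p^3."""
    return 3 * p**2 * (1 - p) + p**3


for p in (0.01, 0.1, 0.3):
    print(f"p = {p}: unencoded {p:.4f}, "
          f"encoded (simulated) {simulate_logical_error_rate(p):.4f}, "
          f"encoded (exact) {analytic_logical_error_rate(p):.4f}")
```

The gain is modest for a three-bit code, but it is the same mechanism that, in larger codes, lets extra qubits buy lower error rates when physical errors are rare enough.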

8. Quantum Computing Scalability

Quantum computing scalability refers to the ability to increase the number of qubits in a quantum processor while maintaining or improving the system's performance, stability, and error rates. One of the primary goals of quantum computing research is to build large-scale quantum computers that can perform complex computations that are infeasible for classical computers. Scalability is critical because many quantum algorithms require a large number of qubits to be useful, and as the number of qubits increases, the system must manage them efficiently without introducing excessive noise, decoherence, or errors. Achieving scalability is one of the biggest challenges in quantum computing, and it requires addressing several technical and physical limitations of quantum processors.

There are several challenges to scalability in quantum computing. As the number of qubits increases, so does the difficulty of maintaining their quantum coherence. Each qubit must be controlled, manipulated, and measured with precision, and every added qubit brings its own control and readout wiring and more opportunities for crosstalk, which becomes increasingly difficult to manage as the system grows. In addition, the connectivity between qubits, which is needed to entangle them with high fidelity, must be preserved as the processor grows. Quantum error correction (QEC) is essential for ensuring the reliability of larger quantum systems, but implementing QEC requires additional qubits, further increasing the complexity of scaling. Moreover, the supporting hardware, such as cryogenic systems (for superconducting qubits) or vacuum chambers (for trapped ions), must handle the demands of a larger qubit array without compromising performance.

To overcome these challenges, various approaches are being explored to improve quantum computing scalability. Modular quantum computing is one approach where smaller, more manageable quantum systems are linked together to form a larger, more powerful quantum processor. This can be achieved using techniques such as quantum communication or quantum networking, where qubits in different modules can be entangled and interact with each other remotely. Another promising approach is quantum error correction, which seeks to protect quantum information from noise and errors by encoding it across multiple qubits. However, effective error correction requires a significant number of physical qubits to represent a single logical qubit, increasing the number of qubits needed for practical computation. Researchers are also investigating new qubit technologies and quantum control methods that can increase the reliability, efficiency, and scalability of quantum processors. Despite the challenges, advances in quantum algorithms, hardware, and error correction are steadily moving the field closer to building large-scale, fault-tolerant quantum computers.
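The physical-qubit overhead can be estimated with figures commonly quoted for the surface code, a leading QEC scheme. The sketch below assumes a rotated surface code, in which a distance-d logical qubit uses d² data qubits plus d² - 1 measurement qubits, and the heuristic scaling p_L ≈ A·(p/p_th)^((d+1)/2) for the logical error rate. The threshold p_th = 1%, the prefactor A = 0.1, and the error rates in the example are assumptions, not measured values for any specific device.

```python
def physical_qubits_per_logical(d):
    """Rotated surface code of odd distance d: d*d data qubits + (d*d - 1) measurement qubits."""
    return 2 * d * d - 1


def logical_error_rate(p, d, p_th=0.01, prefactor=0.1):
    """Heuristic p_L ~ A * (p / p_th)^((d + 1) / 2); A and p_th are assumed values."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)


def smallest_distance(p, target, p_th=0.01, prefactor=0.1, d_max=101):
    """Smallest odd distance whose estimated logical error rate meets `target`."""
    if p >= p_th:
        return None  # at or above threshold, a larger code does not help under this model
    for d in range(3, d_max + 1, 2):
        if logical_error_rate(p, d, p_th, prefactor) <= target:
            return d
    return None


p_physical = 1e-3  # hypothetical physical error rate per operation
target = 5e-12     # hypothetical error budget per logical qubit
d = smallest_distance(p_physical, target)
print(f"distance {d}: {physical_qubits_per_logical(d)} physical qubits per logical qubit")
```

Under these assumptions, a physical error rate ten times below threshold still calls for distance 21, or 881 physical qubits, for each logical qubit; an algorithm that needs many logical qubits multiplies that overhead accordingly.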

